The first thing you have to understand when looking at Mercurial is what a Distributed Version Control System (DVCS) is, how it's different from things like Subversion or CVS, and why you would want to use it. So, let me start with a brief answer to those questions.

What and Why

Centralized version control systems like CVS, Subversion, Perforce, and the like operate by having a centralized repository server. Each client does a checkout of the code from the centralized repository, makes changes, and commits their changes back to the server. DVCSs like Mercurial and Git differ from these in two main ways.

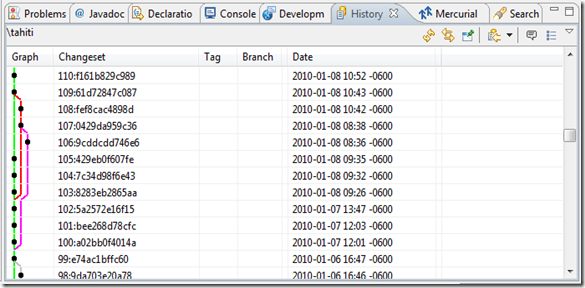

One, when a person wants to get the code from a repository, they don't just check out the latest version. They copy (or clone) the entire repository, pulling down every revision of every file in the repo. This only has to be done once; from then on they only need to pull down whatever new changes have reached the repo. Then the person works with their local repo until they are ready to push their changes back to the main repo.

Two, the fact that everyone has a full copy of the repo means this is more of a peer-to-peer technology than a client-server technology. That is, with centralized version control it is clear that the server is the master repo, since it alone holds all the data. But with DVCS everyone holds all the data, so the only thing that makes one repo the master repo is that everyone working on the project decided it would be the master repo. There is technically no difference between the master repo and all the cloned repos on other people's machines. We'll talk about the effects of this more as we continue, but let's move on for now.

So, why would you want to use a DVCS? One of the things that I like about it is that, since it's peer-to-peer, there's no server to run. If you want fancy things like web access to a repository, then you'll have to run some kind of server, but if all you want is a repository on your local machine or on your network, then all you need is a directory. All of the logic for a DVCS is in the program that runs on the client. So if you're working on something alone, but you want version control, DVCS is the easiest thing to set up. And if you're on a large team of developers, it just so happens that DVCS will work really well for that, too.

With DVCS, merging just becomes a way of life. But because of the way it works, merging is usually a lot easier than it can be with centralized version control systems. Also, since each peer has a full copy of the repository, it's like a bunch of automatic backups of your repo. Server crashed? Can't get your main repository back? Not a problem! Just have one of the peers copy their repository to a central location, and have everyone start pushing their changes to it.

Before I started using Mercurial I had heard a lot about DVCS and never understood why everyone thought it was so great. Now I've been using Mercurial for a couple of months and there is no way I would ever want to use something like Subversion or CVS again. It is so much faster and better to work with. If you'd like to read more about why DVCS, check out Chapter 1 of Mercurial: The Definitive Guide, called "How did we get here?"

Mercurial vs Git

Before I say anything on this, let me say that I agree with many others who have said that the important thing is moving from centralized version control to distributed version control. Deciding which DVCS to use is really just a matter of preference. Both Mercurial and Git are great.

So, why did I go with Mercurial? To be quite honest, it's mostly because I tried to get Git working on my machine once and couldn't figure it out (probably because I didn't understand it). Then, a while later, I tried to get Mercurial working, and got it working right away. I'm not sure if my problems with Git were normal or just plain ignorance on my part, but the end result is that I have stuck with Mercurial ever since.

Git does seem to have more momentum around the development community, and it looks as though Eclipse might be embracing it as the sanctioned DVCS for Eclipse. But right now the Mercurial plugin for Eclipse seems much farther along than the one for Git. Also, the support for Git on Windows is far behind that of Mercurial, and with its 139 commands Git seems quite a bit more complex.

On the more technical side, the Mercurial definitive guide points this out, and I'm sure this is totally unbiased ;)

While a Mercurial repository needs no maintenance, a Git repository requires frequent manual “repacks” of its metadata. Without these, performance degrades, while space usage grows rapidly. A server that contains many Git repositories that are not rigorously and frequently repacked will become heavily disk-bound during backups, and there have been instances of daily backups taking far longer than 24 hours as a result. A freshly packed Git repository is slightly smaller than a Mercurial repository, but an unpacked repository is several orders of magnitude larger.

But, as I said, the important thing is moving to DVCS, not which one you choose.

Change Sets

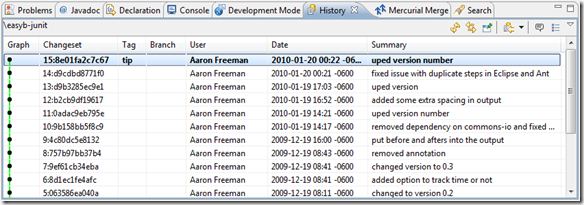

One of the most important concepts with Mercurial is the Change Set. A change set is a set of files whose modifications were committed to the repo all at once with a commit command. Every change set has a globally unique identifier assigned to it so that Mercurial can always tell one change set from another no matter what machine it came from.

A change set usually has one parent, but will have two when you are doing a merge. A change set can never have more than two parents, though, which helps to limit the complexity of branches and merges in the version tree.

Commands

You use the clone command to clone a repository from the main location like this:

hg clone http://mysite.com/myRepo [destination folder name]

Once you have done this you now have a full copy of the repository. Under the folder you specified there will be a .hg folder. This folder contains all the repo history and information. Everything else under the main folder is called the working directory. (If you want to disconnect the folder from the Mercurial repo, you just have to delete the .hg folder.)

Once you have made some changes you execute the commit command to commit them to your local repository (this creates a change set). Then, once you are ready to share everything with the rest of your team, you do a push. This will push all of the change sets you have committed to your repo out to the main repo that you cloned from.

In order to update your repo with other people's changes you do a pull. This will pull in all change sets in the main repo that you do not have in your local repo. It's important to remember that this will not update your working directory. All this does is pull change sets into the .hg folder. In order to update your working directory to the latest version, you have to do an update.

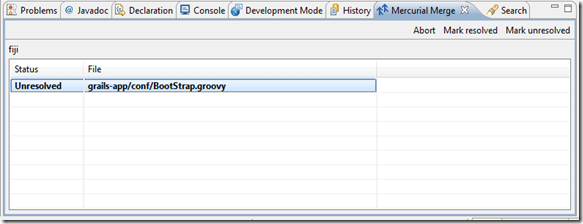

If the change sets you pulled are not descendants of the change set your working directory is based on (typically because you committed locally in the meantime), a plain update will not combine the two lines of work; Mercurial will point you to a merge instead. Running the merge combines both sets of changes in your working directory, and committing that result creates a new change set with two parents that represents the merging of the two. You then push the new change set back to the main repo.

Those are the basics of how Mercurial works, but there's a lot more to learn. I recommend reading Mercurial: The Definitive Guide if you want to know more.

My next Mercurial post will be about using it with Eclipse.